Artificial Intelligence (AI) is changing our world in many ways. In fact, you have probably interacted with AI without even realizing it. From virtual assistants such as Siri and website chatbots to product recommendations on Amazon, AI is becoming a major part of everyday life. But what exactly is artificial intelligence, and how does it work? What are its limitations and dangers? More importantly, what's in place to ensure that AI benefits us instead of causing harm? This beginner's guide will demystify AI, give you a basic understanding of its capabilities and limitations, and offer an outlook on AI's future.

What is AI?

Before we dive in, let's get the most general question out of the way. AI refers to computer systems or machines designed to perform tasks that would otherwise require human intelligence. These systems can analyze data, recognize patterns, learn from experience, and make data-informed decisions. To learn how these key concepts and tools apply directly to your business, check out Introduction to Artificial Intelligence (AI).

The key distinction between the AI we see in science fiction and the AI behind many of our apps and tools is the difference between narrow (or weak) AI and Artificial General Intelligence (AGI), also known as strong AI.

What is AGI in AI?

To understand AGI, start with the AI that exists today, narrow AI, and keep in mind that AGI is still theoretical. Our expectations for AGI therefore highlight what current AI still cannot do.

Narrow AI focuses on performing a single task extremely well, such as playing chess, translating languages, or recognizing images. It is considered weak because it can only operate within specific parameters. This type of AI exists today and can outperform humans at many tasks.

Artificial General Intelligence (AGI) is a hypothetical AI system with human-level intelligence and capabilities across many domains. In theory, such a versatile system could adapt to changing surroundings and conditions and solve problems as effectively as humans. AGI does not yet exist. Learning and thinking like a human would likely require self-awareness and consciousness, which raises an entire field of questions. We would need significant leaps in AI research (and, hopefully, greater maturity as a collection of societies) before Star Trek's Data or Iron Man's Jarvis becomes a reality.

How Does AI Work?

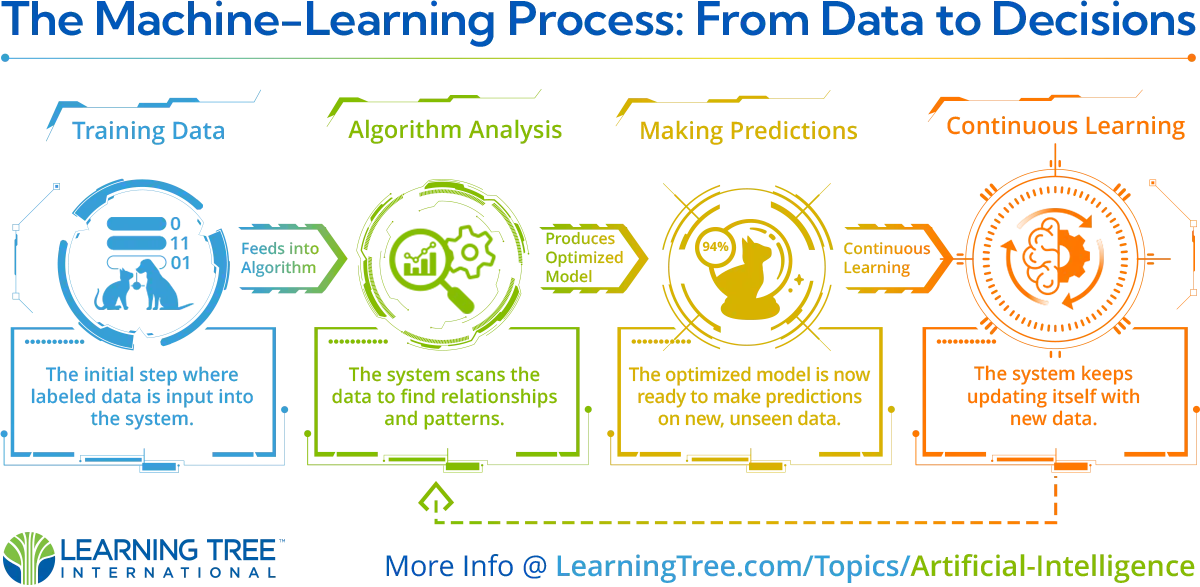

Traditional programming involves giving a computer explicit, step-by-step instructions. Machine learning takes a different approach: it allows computers to learn from data without being explicitly programmed.

Here's a quick overview of how it works:

- Training data is fed into a machine-learning algorithm. For example, labeled images of cats can teach a system to recognize cats.

- The algorithm analyzes the data to find patterns and relationships, adjusting its internal parameters to reduce its errors.

- Once trained, the model can make predictions or decisions about new, unseen data.

- Over time, the system can continue to learn from new data to improve its model.
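To make those steps concrete, here is a minimal Python sketch. It assumes the simplest possible model, a straight line `y = w * x + b`, trained on a made-up dataset with gradient descent. The function names `train` and `predict` are illustrative and do not come from any library. Real systems have millions or billions of parameters, but the basic loop is similar in spirit: measure the error, nudge the parameters, and repeat.

```python
# A minimal sketch of the training loop, assuming a one-variable linear model
# y = w * x + b. The data and hyperparameters are made up for illustration.

def train(data, w=0.0, b=0.0, epochs=2000, learning_rate=0.01):
    """Adjust w and b by gradient descent to reduce mean squared error."""
    n = len(data)
    for _ in range(epochs):
        # How much would the average squared error change if w or b moved?
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Nudge both parameters in the direction that lowers the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def predict(w, b, x):
    """Apply the trained model to a new, unseen input."""
    return w * x + b


# Step 1: labeled training examples (here they follow y = 2x + 1)
training_data = [(0, 1), (1, 3), (2, 5), (3, 7), (4, 9)]

# Step 2: learn the parameters from the data
w, b = train(training_data)

# Step 3: predict for an input the model never saw during training
print(round(predict(w, b, 10), 1))  # → 21.0

# Step 4: when new data arrives, keep training from the current parameters
training_data.append((5, 11))
w, b = train(training_data, w, b)
```

Step 4 is the simplest form of "continuing to learn". Many production systems instead retrain on a schedule rather than updating after every new example.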

This iterative approach allows machines to learn and improve without explicit programming. Neural networks, which are loosely inspired by how the human brain works, are especially good at detecting patterns and distinctive features in data. Deep learning, a specialized subfield of machine learning built on neural networks with many layers, has advanced considerably thanks to the availability of vast computing resources. Progress in neural networks and machine learning is a key driver of the recent rise of AI applications, because these networks let machines mimic aspects of human decision-making.
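A single artificial neuron is the smallest building block of a neural network. The sketch below is a toy perceptron, one of the earliest neuron models, trained to recognize the logical AND pattern. The names and the learning rate are illustrative assumptions. Modern deep-learning networks stack many units in layers and use smoother activation functions with gradient-based training, so treat this as an intuition aid rather than a picture of how production systems are built.

```python
# A toy artificial neuron (a perceptron). Real neural networks stack many
# units in layers and train them with gradient-based methods instead.

def neuron_output(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias, then 'fire' (1) or not (0)."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_neuron(examples, epochs=20, learning_rate=1.0):
    """Classic perceptron learning rule: adjust weights only after a mistake."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - neuron_output(weights, bias, inputs)  # -1, 0 or +1
            # Strengthen or weaken each connection that contributed to the mistake
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Learn the logical AND pattern: output 1 only when both inputs are 1
and_examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_neuron(and_examples)
for inputs, target in and_examples:
    print(inputs, neuron_output(weights, bias, inputs), "expected", target)
```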

What can AI do nowadays, and where are its limits?

AI trained on an existing library of content excels at some tasks that humans struggle with, and it can handle many tasks involving unstructured data. It can recognize patterns in data, make predictions and forecasts, create highly detailed and realistic images from text prompts, or solve complicated math problems from a photo. AI is all around us, often working quietly in the background to enhance our experience.

How is AI helpful?

- Virtual assistants like Siri, Alexa and Google Assistant understand verbal commands and questions.

- AI powers product recommendations on Amazon and Netflix, suggesting purchases and shows based on your browsing and viewing history.

- AI aids doctors by analyzing medical images to detect tumors, abnormalities, and diseases.

- AI can take over tedious tasks that used to be obstacles for people, such as drafting letters to appeal denied insurance claims.

- AI can help workers analyze pay equity within workforces or across similar industries.

- Chatbots provide customer service through conversational interfaces.

- AI tools offer fast, thorough analysis of complicated data, including research on competitors in a crowded marketplace.
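Recommendation engines are among the easier items on this list to look inside. Amazon's and Netflix's actual systems are proprietary and far more elaborate, so the sketch below shows only one common textbook technique, user-based collaborative filtering: find people whose history resembles yours, then suggest what they watched that you haven't. The users, shows, and function names are hypothetical.

```python
import math

# Hypothetical viewing history: 1 means the user watched the show
history = {
    "alice": {"Show A": 1, "Show B": 1, "Show C": 1},
    "bob": {"Show A": 1, "Show B": 1},
    "carol": {"Show C": 1, "Show D": 1},
}


def cosine_similarity(a, b):
    """How alike two users' histories are (1.0 means identical tastes)."""
    shared = set(a) & set(b)
    dot = sum(a[item] * b[item] for item in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(user, history):
    """Rank unseen items by how similar the users who watched them are."""
    scores = {}
    for other, items in history.items():
        if other == user:
            continue
        similarity = cosine_similarity(history[user], items)
        for item in items:
            if item not in history[user]:
                scores[item] = scores.get(item, 0.0) + similarity
    return sorted(scores, key=scores.get, reverse=True)


print(recommend("bob", history))  # ['Show C', 'Show D']
```

Bob's history overlaps heavily with Alice's, so her remaining show ranks first. Carol shares nothing with Bob, so her other show scores zero.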

What are some disadvantages?

- Lack of the general intelligence that humans have.

- Lack of the common sense that most humans develop by 11 months of age.

- Overstated capabilities. For example, the ability of autonomous vehicles to navigate without human input, using environmental sensors and AI, has been overstated, with significant consequences.

- Susceptibility to bad training data.

- Reliance on large, up-to-date training datasets.

While narrow AI can outperform humans at many specialized tasks, general intelligence across different domains remains elusive. For example, try asking AI to write a movie script. Without human guidance or context, AI only learns that actions are followed by outcomes, and it associates those outcomes with patterns of words rather than understanding their real-world consequences.

Dangers of AI: Fairness, Model Collapse, and Model Drift

While AI has the potential to provide immense value and serve previously underserved populations, it also faces unique challenges. For instance, if a large language model is trained on flawed or incomplete data, its outputs can become skewed, leaving it up to the user to detect the inaccuracies. Additionally, when AI learns from content generated by itself or other AI systems, its results can degrade over time, a phenomenon known as model collapse. Furthermore, model drift occurs when an AI model becomes less accurate over time because the world changes but the model does not adapt. These challenges can be mitigated with careful design and oversight.

What causes unfairness in machine learning?

Unfairness in machine learning occurs when an algorithm produces skewed or inaccurate results because of poor assumptions made while building it. These issues can arise from the algorithm's design, from how the data sample is collected, or from societal systems that influence the data and the model.

- A common issue early in the process is Sample Limitations. If the sample is too small or unrepresentative, the AI won’t properly learn how the real world operates.

- Incorrect Data stems from societal inequalities and flawed real-world data, leading to inaccurate outcomes. The source data may reflect harmful stereotypes or exclude key groups.

- Exclusion Issues occur when important data is left out because the modeler doesn’t recognize it as significant or fails to account for outliers.

- Algorithmic Imbalances are built into the model during the coding process, prioritizing certain results over others. This can sometimes be beneficial, such as ensuring AI cannot promote violence or hate speech, but it can also create unintended consequences in other applications.
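Sample limitations are easy to miss because a single overall accuracy number can hide them. The sketch below uses made-up results and breaks accuracy down by group. The record format `(group, predicted, actual)` and the function name are assumptions chosen for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return overall accuracy plus accuracy for each group.

    Each record is a hypothetical (group, predicted_label, true_label) tuple.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {group: correct[group] / total[group] for group in total}
    return overall, per_group


# Made-up results: group "A" dominates the sample, group "B" is rarely seen
records = (
    [("A", 1, 1)] * 90 + [("A", 0, 1)] * 2  # A: 90 of 92 correct
    + [("B", 1, 0)] * 6 + [("B", 0, 0)] * 2  # B: 2 of 8 correct
)

overall, per_group = accuracy_by_group(records)
print(f"overall: {overall:.0%}")  # overall: 92%
for group, accuracy in sorted(per_group.items()):
    print(f"group {group}: {accuracy:.0%}")  # group A: 98%, group B: 25%
```

Overall, the model looks 92% accurate, but it is right only 25% of the time for the rarely seen group B.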

How to ensure fairness in algorithms?

- The Power of Awareness: Recognizing and understanding the imbalances within our AI systems is the essential first step toward building sounder, more accurate AI solutions.

- Intervention in Action: Address these challenges actively with a multifaceted approach. This includes collecting data from a variety of sources, inviting third-party experts to conduct impartial audits, and gathering insights from the wider community.

- Treading the Regulatory Path: Some design choices, particularly the Algorithmic Imbalances described above, may be implemented intentionally as safeguards for society's greater good. Even then, it's crucial to understand the broader implications of such decisions.
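An impartial audit can take a concrete form: compare how often a model says yes to each group. The sketch below computes per-group approval rates and the ratio of the lowest rate to the highest. The 0.8 threshold comes from the "four-fifths" rule of thumb in US employment-selection guidelines and is used here only as an illustrative cutoff. The loan data is hypothetical, and a real audit would examine many more measures.

```python
def approval_rates(decisions):
    """decisions: (group, approved) pairs -> share of approvals per group."""
    totals, approvals = {}, {}
    for group, approved in decisions:
        totals[group] = totals.get(group, 0) + 1
        approvals[group] = approvals.get(group, 0) + int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest approval rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical decisions from a loan-approval model under audit
decisions = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = approval_rates(decisions)
ratio = impact_ratio(rates)
print(rates, round(ratio, 2))  # {'A': 0.6, 'B': 0.3} 0.5

# Illustrative cutoff borrowed from the "four-fifths" rule of thumb
if ratio < 0.8:
    print("Flag for review: approval rates differ substantially between groups")
```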

What is model collapse?

Model collapse happens when a machine-learning model starts learning from the outputs of other AI systems instead of from real human data, which makes its results less reliable. It can set in quickly, sometimes as early as the second or third round of model training. Think of it like making a copy of a copy of a picture until you can no longer recognize the original. Eventually, what the first generation learned from real data is lost, replaced by blurry copies that are used to train each new generation.
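A classic toy demonstration of this effect uses the simplest possible "model": a bell curve described by a mean and a spread. Each generation is fitted only to samples drawn from the previous generation's curve, never to the original data. This is a simplified sketch under those assumptions, not a simulation of a real language model. It still shows the copy-of-a-copy effect: over the generations, the spread tends to shrink, so the rare, unusual values in the tails disappear.

```python
import random
import statistics


def simulate_collapse(generations=200, samples_per_generation=10, seed=0):
    """Toy model collapse: each generation learns only from the previous one.

    The 'model' is just a bell curve (mean and spread). Generation 0 stands in
    for real, human-made data; every later generation is fitted to synthetic
    samples produced by the generation before it.
    """
    rng = random.Random(seed)
    mean, spread = 0.0, 1.0
    spreads = [spread]
    for _ in range(generations):
        # The current model generates synthetic "content"...
        synthetic = [rng.gauss(mean, spread) for _ in range(samples_per_generation)]
        # ...and the next model is trained on that content alone
        mean = statistics.fmean(synthetic)
        spread = statistics.pstdev(synthetic)
        spreads.append(spread)
    return spreads


spreads = simulate_collapse()
for generation in (0, 1, 2, 3, 50, 100, 200):
    print(f"generation {generation:>3}: spread {spreads[generation]:.3g}")
```

The exact numbers depend on the random seed. With only ten samples per generation, though, the spread typically shrinks toward zero long before generation 200.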

What is model drift?

Model drift is the gradual loss of accuracy in an AI system as the world it models changes. Here are a few common reasons it happens:

- Environmental Shifts: Just as changing seasons can alter the landscape, shifts in the environment can impact a model's performance. Imagine an AI trained to predict the weather. If there's a significant change in the climate over the years, our trusty AI might not be as spot-on with its forecasts.

- Evolving Relationships: Over time, the relationships between certain variables may change, a phenomenon known as concept drift. Consider an AI model that estimates consumer demand for a product. If societal trends change buying behavior, the model's predictions may no longer match actual outcomes.

- Data Collection Modifications: Sometimes the way data is collected, or the units it is recorded in, changes, causing a model to misinterpret information. For instance, if a system learned from weight data in kilograms and suddenly receives input in grams, every new value will look 1,000 times larger than it should.

Think of how a cherished photo of your young pet may no longer resemble them as they grow older. Similarly, a model can drift away from accuracy as the variables it was trained on evolve. To stay reliable, AI models need continuous monitoring, updates, and refinements.
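Drift is usually caught by monitoring incoming data, not by waiting for complaints. The sketch below implements one very simple check on hypothetical weight data. It flags a new batch whose average lies more than three standard errors from the training average. Production monitoring typically relies on richer tests, such as the Kolmogorov-Smirnov test or a population stability index. Even this basic version, though, catches the kilograms-versus-grams mix-up described in the list above.

```python
import statistics


def drift_alert(training_values, new_values, threshold=3.0):
    """Flag a batch whose average is far from what the model was trained on.

    Returns (alert, z): z is how many standard errors the new batch's mean
    sits from the training mean. A deliberately simple check, not a full test.
    """
    train_mean = statistics.fmean(training_values)
    train_sd = statistics.stdev(training_values)
    standard_error = train_sd / len(new_values) ** 0.5
    z = abs(statistics.fmean(new_values) - train_mean) / standard_error
    return z > threshold, z


# Hypothetical body weights the model learned from, in kilograms
training_kg = [62.0, 70.5, 81.2, 68.4, 75.0, 90.3, 58.7, 72.6]

# Later batches: one still in kilograms, one after an upstream switch to grams
batch_kg = [66.0, 74.0, 71.0]
batch_grams = [64_100.0, 71_800.0, 79_500.0]

print(drift_alert(training_kg, batch_kg)[0])     # False
print(drift_alert(training_kg, batch_grams)[0])  # True
```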

The Future of AI

Many experts predict that AI capabilities will continue to advance rapidly, with breakthroughs in a wide range of areas.

Some high-growth areas include:

- Increased human-like reasoning and problem-solving.

- Personalized education and healthcare.

- Further automation of routine physical and cognitive tasks.

- Integration of AI in more everyday objects through the Internet of Things.

However, with significant advancement in AI comes greater risk:

- Job disruption and effects on employment.

- Lack of transparency in AI decision-making.

- Data privacy and cybersecurity vulnerabilities.

- Exacerbation of existing imbalances.

Experts believe these risks will require proactive governance to craft policies that maximize the benefits of AI while minimizing downsides.

The Takeaway

This introduction covered the essential aspects of artificial intelligence. While the technology has enormous transformative potential for individuals and organizations, it is still in its early stages of development. AI is developing rapidly and creating opportunities in many areas, including business, science, and everyday life. The long-term outcomes are uncertain, but understanding the basics of AI will help you better grasp its future implications.

Explore how these tools can help you imagine new ways to work in your role. If you're just starting to explore, I recommend Learning Tree's ChatGPT for Business Users: On-Demand. If you've already been using AI as a business solution, consider the AI Skills for Business Professionals Course. Either way, you can learn how to make AI work for you, not against you.