Many of you must follow formal cybersecurity requirements. PCI DSS applies if you accept credit or debit cards. HIPAA applies if you store or process health care data.

Then, if you're with the Department of Defense or other U.S. Government agencies, there are more detailed configuration requirements.

In theory, you could just read the requirements and then manually check a server. But consider the amount of work for one server. Then multiply that by the number of servers, and it quickly becomes impractical!

How can we make this practical?

Use a Scanner

Learning Tree's introductory cybersecurity course describes vulnerability scanners. These programs automatically examine a system and then tell you what's wrong. Some of them can also do mitigation: they automatically fix some of the problems they find.

But wait. How does a scanner decide what "wrong" really means?

Versions and Patches

The most common tools look for missing software patches. The software first contacts the update servers run by your operating system's vendor. It downloads a list of the latest version numbers for everything included with that operating system. Then it goes through the local package database, looking for installed versions that aren't the latest.
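The comparison step can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the package names and version numbers are invented, the function names are hypothetical, and versions are treated as plain dotted numbers. Real package managers use more elaborate comparison rules.

```python
import re

def version_key(version: str) -> tuple:
    """Turn a version string like '3.0.13' into a tuple of integers for comparison."""
    return tuple(int(part) for part in re.findall(r"\d+", version))

def find_outdated(installed: dict, latest: dict) -> dict:
    """Return {package: (installed_version, latest_version)} for packages that are behind."""
    outdated = {}
    for name, have in installed.items():
        want = latest.get(name)
        if want is None:
            # Not on the vendor's list, so this kind of check cannot say anything about it.
            continue
        if version_key(have) < version_key(want):
            outdated[name] = (have, want)
    return outdated

# Hypothetical data standing in for the local package database and the vendor's list.
installed = {"openssl": "3.0.2", "bash": "5.1.16", "customapp": "1.0"}
latest = {"openssl": "3.0.13", "bash": "5.1.16"}

report = find_outdated(installed, latest)
print(report)
```

Note that the comparison is numeric, component by component. Comparing the raw strings would wrongly rank "3.0.2" above "3.0.13".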

In Learning Tree's introductory cybersecurity course you use the Microsoft Baseline Security Analyzer to scan the Windows workstation you use in the labs.

Every operating system has its own version of this kind of tool. Linux distributions use package-manager commands such as yum and apt, and of course there are graphical wrappers around those. On my Linux Mint laptop, a little blue shield appears in the task bar. That tells me that its hourly check showed that updates are available.

But the thing is, those scanners come with the operating system, and so they only check the obvious components. If I add other software, or if I install things in unusual locations, those scanners won't know to check.

Additionally, they only check patches. My system might have some flawed software for which the vendor hasn't released a patch yet.

This leads us to...

Vulnerability Scanners

These are tools from third parties. They can check specific operating systems. Additionally, they check other optional packages.

They will give us the list of all the problems they knew how to find. That covers more risks than just the list of missing patches.

Examples of these tools include Nessus, its free fork OpenVAS (my preferred tool), Retina, and others. Learning Tree's vulnerability assessment course lets you test-drive multiple tools.

These tools overlap. However, each one misses something that another one spots. Therefore, it's good to run multiple tools.

Remember, though, that these tools just check versions of operating systems and other software. You could use the most trustworthy software. But nothing prevents poor configuration choices.

We need something to also test configurations.

So, this leads to configuration analysis.

Configuration Analysis

Now the tools become scarce.

Why? There should be a big market for tools that automatically find and fix security problems.

Yes, that's right. But this is a very difficult problem to solve.

First, because today's environment of an operating system plus added service and application software becomes enormously complicated.

Second, because everyone has their own idea of precisely what "secure" and "appropriate" mean.
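To make the idea concrete, here is a minimal sketch of a configuration check in Python. It treats the policy as data, which is exactly the part on which people disagree. The sample text imitates an OpenSSH server configuration, but the policy values and the function names are assumptions made up for this illustration, not any official baseline.

```python
def parse_settings(text: str) -> dict:
    """Parse 'Keyword value' lines, skipping blank lines and # comments.

    The first occurrence of a keyword wins, and keywords are lowercased.
    """
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        if len(parts) == 2:
            settings.setdefault(parts[0].lower(), parts[1].strip().lower())
    return settings

def check_policy(settings: dict, policy: dict) -> list:
    """Return a list of human-readable findings where settings differ from the policy."""
    findings = []
    for key, required in policy.items():
        actual = settings.get(key.lower())
        if actual is None:
            findings.append(f"{key}: not set explicitly (policy requires '{required}')")
        elif actual != required.lower():
            findings.append(f"{key}: is '{actual}', policy requires '{required}'")
    return findings

# Hypothetical configuration fragment and hypothetical policy.
sample = """
# sample sshd_config fragment
PermitRootLogin yes
PasswordAuthentication no
"""
policy = {"PermitRootLogin": "no", "PasswordAuthentication": "no", "X11Forwarding": "no"}

findings = check_policy(parse_settings(sample), policy)
for finding in findings:
    print(finding)
```

Change the policy dictionary and the same system goes from "compliant" to "non-compliant" without a single setting changing. That is the second difficulty in miniature.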

Linux distributions come with tools that can be used during the installation, or afterward. However, they are rather coarse-grained, typically allowing you to choose between three or four security levels.

Microsoft has provided a Best Practices Analyzer for their Server products.

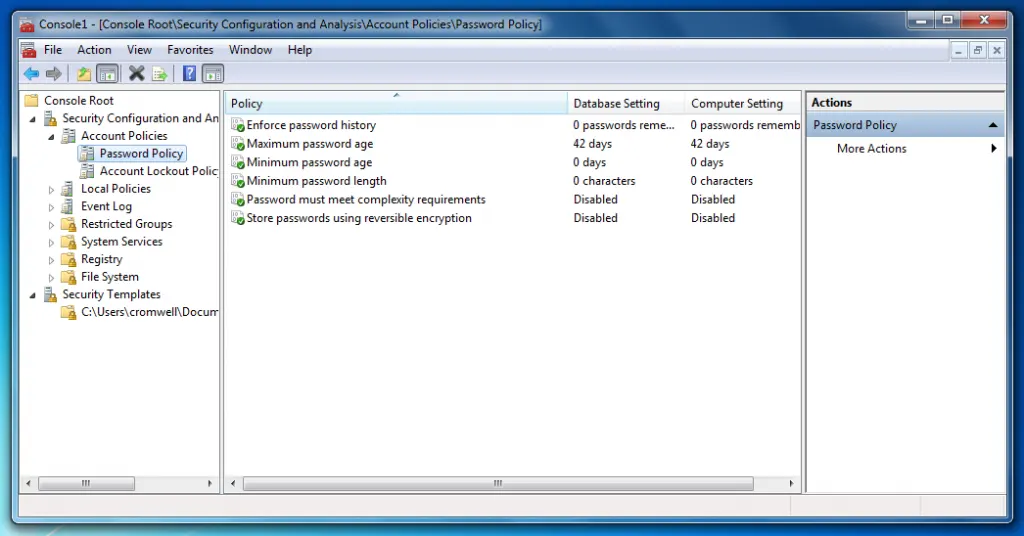

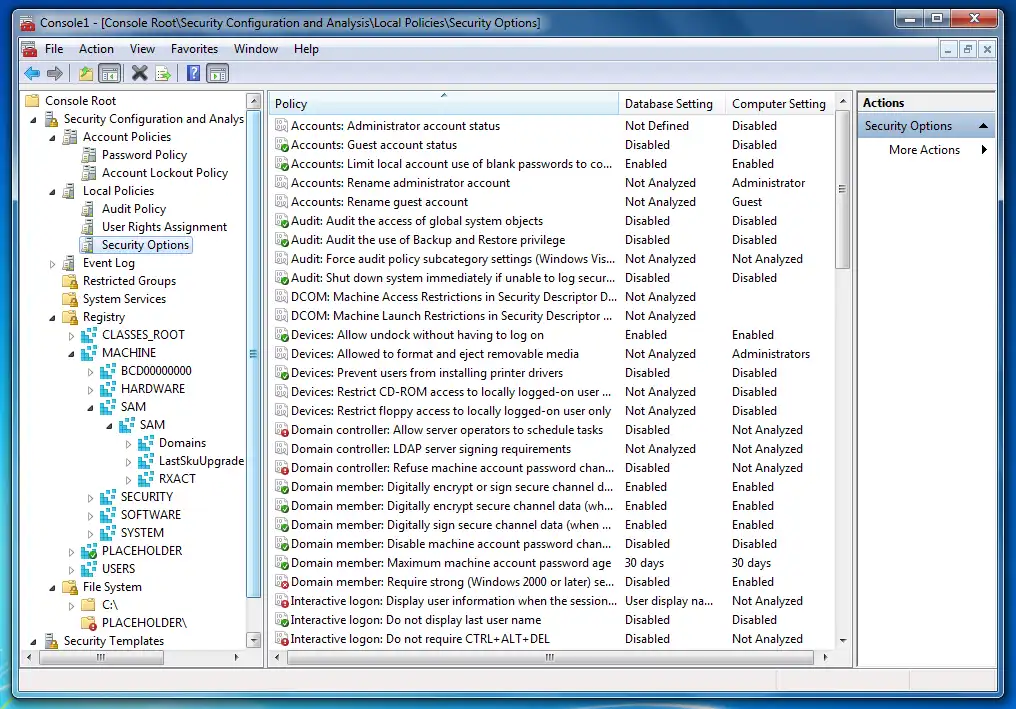

Learning Tree's introductory cybersecurity course has you run the Windows Security Configuration and Analysis Snap-In for the MMC, the Microsoft Management Console.

It's very helpful for applying changes, for enforcing an existing security policy. However, it isn't very helpful for designing that complex configuration.

Logic and Limitations

You have to be careful. These tools aren't perfect in what they do, or how they report it.

"I know that I know nothing." That's the "Socratic paradox", and it applies here. These tools will tell us something. However, there are things that they don't test, and we don't even know what those things are.

I recently used a tool that the U.S. Department of Defense created to test Linux server security. It failed in some interesting ways.

One DoD criterion is that all system files must be exactly as supplied by the provider. No changes at all. The scanning software failed to understand the package management system. Therefore, it could not apply this test. However, it did not flag this as a failure. Instead, it very subtly mentioned that this one test was "inapplicable".

I would call that a dangerous false negative error. There was a big problem, caused by the scanner. A false negative gives you a false sense of security. This is very dangerous.

Then it followed that with a blizzard of false positive errors. It couldn't read the package database, so it couldn't tell that I hadn't installed several antiquated and dangerous remote-access services. So there were many reports that I hadn't explicitly disabled telnet, and rsh, and rlogin, and rcp, and so on.
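Both failures come from the same gap: the scanner had no honest way to say "I could not check this." A minimal Python sketch of the distinction follows. It is not how the DoD tool works; the status names and functions are hypothetical, and it simplifies by treating service names as package names.

```python
from enum import Enum
from typing import Optional

class Status(Enum):
    PASS = "pass"
    FAIL = "fail"
    NOT_APPLICABLE = "not applicable"   # the rule genuinely does not apply to this system
    ERROR = "error: could not check"    # the scanner was unable to run the test

def check_service_absent(service: str, installed_packages: Optional[set]) -> Status:
    """Check that a legacy service is not installed.

    installed_packages is a set of package names, or None if the scanner
    could not read the package database.
    """
    if installed_packages is None:
        # Neither "fail" nor "not applicable" would be truthful here.
        return Status.ERROR
    return Status.FAIL if service in installed_packages else Status.PASS

def scan_is_conclusive(results: dict) -> bool:
    """A scan can only be trusted if no individual check ended in ERROR."""
    return all(status is not Status.ERROR for status in results.values())

legacy = ["telnet", "rsh", "rlogin", "rcp"]

# Package database unreadable: every check reports ERROR, not a pass or a fail.
unreadable = {svc: check_service_absent(svc, None) for svc in legacy}

# Package database readable, and none of the legacy services are installed.
readable = {svc: check_service_absent(svc, {"openssh-server"}) for svc in legacy}

print(scan_is_conclusive(unreadable))
print(scan_is_conclusive(readable))
```

With an explicit error status, the unreadable database shows up as one loud problem instead of a quiet "inapplicable" and a pile of spurious failures.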

Expertise is Needed

So, we have some tools. But they have limits. There's no all-knowing, all-capable Jeeves to do this for us. An important computer system needs a careful, knowledgeable person. You must analyze the system and its environment. Then consider the risks and the desired goals. And finally, make the best decisions, and carry them out.

The Promise of SCAP Tools

U.S. Government settings, especially in the Department of Defense, have much more constrained rules. Automated testing and mitigation become practical.

Some recently developed tools let us automate hardening, if we have very specific goals in mind. Come back next week, and I'll describe what I've been doing with this technology on a consulting project.